Black Market Archive

From OMAPpedia

Week 1 -- Projects from 2/28/11 - 3/4/11

PandaMediaBox

- Description

- The PandaMediaBox will contain a 7-inch touch panel and will be used to play and control audio and video media. The goal is to play media from a SAN or NAS, IPTV, web radio, USB DVB sticks, USB storage devices, Bluetooth or even a VDR. For audio it will contain an integrated amplifier, and for video the user can choose between the integrated 7-inch panel and the HDMI output. All input is done via the touchscreen; additionally, it will be possible to control the box via a web interface and a secure API, e.g. for smartphone applications.

- Time frame

- 3 months for the first usable beta, 6 months to include all features in a stable version

- Background & work by project submitter/s

- Computer science student; I have worked on an embedded sensor network and on larger software projects.

- Wiki/URL Links

- Not available yet

- Contact information

Helping interface for dependent people

- Description

- Hardware & Software

- Gesture/voice interface to help people with disabilities (blindness, paraplegia, quadriplegia) and elderly people stay independent:

- For different use cases:

- - To locate: a speaking GPS that tells the current location (street name) on request (button or speech recognition).

- - Read and tell (OCR and speech synthesis)

- - Speech recognition to drive actuators

- - Gesture (eye or body movements) or speech commands to trigger actuators

- - Status of remote or local sensors in an equipped home

- - Easy communication (VoIP and a connected link over Wi-Fi/GSM)

- Providing all these features in software:

- - Gesture integration and OCR character reading: image sensor and image processing

- - Voice recognition / speech synthesis: audio processing

- - Actuators and sensors (local: serial/Ethernet link; remote: Bluetooth/Wi-Fi)

- - Speech synthesis integrated for reading or locating

- - VoIP communication (start/stop calls with a predefined list of contacts, triggered by voice or gesture)
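The "speaking GPS" use case starts by reading a position from the GPS receiver. A minimal sketch, assuming the module emits standard NMEA 0183 GGA sentences (typical for consumer GPS receivers, but the actual module is not named in this proposal): it validates the checksum and converts the ddmm.mmmm fields to decimal degrees, which a navigation layer would then map to a street name for speech synthesis. The function names are hypothetical.

```python
from functools import reduce
from typing import Optional, Tuple


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*' (NMEA 0183 rule)."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    dot = value.index(".")
    degrees = int(value[: dot - 2])    # whole degrees before the minutes
    minutes = float(value[dot - 2 :])  # mm.mmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (lat, lon) from a GGA sentence, or None if the receiver has no fix."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, _, checksum = sentence[1:].partition("*")
    if int(checksum, 16) != nmea_checksum(body):
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("GGA") or len(fields) < 7:
        raise ValueError("not a GGA sentence")
    if fields[6] in ("", "0"):  # fix quality 0 means "no fix"
        return None
    return _to_degrees(fields[2], fields[3]), _to_degrees(fields[4], fields[5])
```

Each line read from the serial port would be passed to `parse_gga()`; sentences with a bad checksum are rejected rather than announced.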

- I know all these functions already exist separately, but I want to integrate them into one system with all the advantages of the Pandaboard:

- - Small form factor

- - Low power consumption

- - Connectivity (video sensors, Wi-Fi, Bluetooth, ...)

- - Processing power for video and audio

- - Linux and OMAP Community

- - Get a fully integrated solution with one small board!!!

- - Pandas are peaceful and powerful bears!

- Time frame

- First hardware and software integration (within 6 months):

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - GPS (UART)

- - USB webcam

- - Bluetooth sensor/actuator modules

- Software

- - Gesture recognition (hand, body movement) triggering linked actions

- - Speech recognition triggering linked actions

- - Speech synthesis

- - Actuators and sensors (I already have some Bluetooth/serial sensors and actuators)

- - Get the street position announced via speech synthesis

- Second hardware and software integration

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - Design of an extension board with GPS (external module with UART) and image sensor

- - Battery pack and case

- Software

- - Eye movement recognition triggering linked actions

- - A graphical interface for helpers to adjust settings and options

- - Deeper integration with the person's environment (generally the home, for quadriplegic or elderly people)

- Background & work by project submitter/s:

- - Personally concerned by people with disabilities and elderly people

- - Already built some assistive electronics (sensors, actuators)

- - Want to develop an open community of helpers around the Pandaboard

- - Work in electronic engineering (software AND hardware!)

- - Developed electronic hardware devices (digital cameras, Bluetooth sensors and actuators)

- - Master's degree in signal (audio and image) processing: OpenCV is my best friend!

- - Developed Linux drivers for image sensors, PCI boards, LCD displays, ...

- - Got some gesture recognition working on my Intel-based Ubuntu computer

- Wiki/URL Links

- Gesture (wayv) : [1]

- OpenCVwiki : [2]

- Speech recognition (cmu-sphinx) : [3]

- Text to speech (festival) : [4]

- GPS (navit) : [5]

- Contact information

Android In-Air Gesture User Interface with Pseudo-Holographic Display

- Description

- Adding an in-air gesture user interface and a pseudo-holographic display to the Android platform running on a Pandaboard. Finger or hand gestures will be detected by a vision sensor (visible light or infrared), and the data will be sent to the Pandaboard either over a wireless connection such as Bluetooth or via USB. The data will be processed and translated into a usable Android input method (IME). In pseudo-holographic display mode, the projector connected to the Pandaboard via HDMI will project onto a pseudo-holographic setup that uses either the 360-degree light field display technique or the pyramid-shaped Pepper's ghost effect. Both the input and the output processing require intensive computing power, which the Pandaboard's high-speed symmetric multiprocessing, together with its powerful graphics core and multimedia accelerator, makes possible. This project will be a glimpse into the future and will hopefully bring usefulness and convenience to everyone in various scenarios, limited only by imagination.
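Turning tracked finger or hand positions into IME input usually starts with classifying simple motions. A minimal sketch, assuming the vision sensor already delivers a time-ordered list of normalized (x, y) hand positions (0 to 1, y growing downward); the travel threshold and the gesture names are hypothetical choices, not part of the proposal.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # normalized (x, y); y grows downward like screen coordinates


def classify_swipe(track: List[Point], min_travel: float = 0.25) -> Optional[str]:
    """Classify a hand track as a swipe by its dominant net displacement.

    Returns "left", "right", "up", "down", or None if the hand moved less
    than `min_travel` (a fraction of the sensor's field of view).
    """
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    if max(abs(dx), abs(dy)) < min_travel:
        return None  # too small: treat as jitter or a hold, not a swipe
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"
```

An IME built on top of this could, for example, forward "left" as Android's `KeyEvent.KEYCODE_DPAD_LEFT`; using net displacement rather than per-frame velocity keeps the sketch simple but ignores curved gestures.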

- Time frame

- 6 months

- Background & work by project submitter/s

- Multimedia University Faculty of Engineering lecturer for subjects such as Robotics & Automations, Embedded System Design and Engineering Mathematics.

- Android developer in the CodeAndroid Malaysia community, focusing on 3D, augmented reality and hardware such as robots. Gives presentations on Android at various national open source conferences and events.

- Supervising various Final Year Projects since 2008 related to 3D, natural user interface, augmented reality and Android.

- Involved in research projects and publications related to Wiimote and multi-touch tabletop. Publication list at http://wenjiun.blogspot.com/search/label/Publication

- Wiki/URL Links

- Wiki page will be set up soon.

- A related blog post at http://wenjiun.blogspot.com/2011/03/android-in-air-gesture-user-interface.html

- Contact information

Albino Interactive Lamp

- Description

- The goal of the project codenamed "Albino" is to build an intelligent lamp/information device. The first iteration will take the form of a small tabletop device, a cross between an art piece and a functional device. The central element of the device is a speaker (about 3 inches in diameter) and a small microphone surrounded by 12 light capsules forming a circle (very crude sketch here: http://dl.dropbox.com/u/149476/flamp.png). Each capsule contains an RGB LED, and the tip of the capsule acts as a button. The most basic use of the device could be as a clock: since there are 12 capsules, it is easy to represent time, and touching the capsules could set an alarm at the desired time. The form factor offers many possibilities, and the idea is to create different applications: clock, internet radio station, weather station, musical instrument tuner, beat box, voice memo recorder, memory game, event notifier (email, IM, etc.). Since the project is fully open, people could build their own devices and write applications and plugins. We believe the Pandaboard would be a great companion to this project. Our previous prototype used a WiFi Arduino, which wasn't powerful enough to do all those things. The Pandaboard has built-in WiFi, a sound card and plenty of memory; furthermore, we believe we could interface with the electronics directly from the Pandaboard, since it features GPIO and serial communications.
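The clock idea maps naturally onto the ring of 12 capsules: the hour selects one capsule and the minutes (in 5-minute steps) select another, like the hands of an analog clock. A minimal sketch of that mapping, assuming capsule 0 sits at the 12 o'clock position and indices increase clockwise; the colors and the function name are hypothetical illustrations.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
OFF: RGB = (0, 0, 0)
HOUR_COLOR: RGB = (255, 0, 0)    # illustrative: red for the hour "hand"
MINUTE_COLOR: RGB = (0, 0, 255)  # illustrative: blue for the minute "hand"


def clock_frame(hour: int, minute: int, capsules: int = 12) -> List[RGB]:
    """Return one RGB value per capsule for the given time of day.

    Capsule 0 is at 12 o'clock; indices increase clockwise.
    """
    frame = [OFF] * capsules
    hour_idx = (hour % 12) * capsules // 12
    minute_idx = minute * capsules // 60  # 5-minute resolution with 12 capsules
    frame[hour_idx] = HOUR_COLOR
    if minute_idx == hour_idx:
        # Both "hands" on the same capsule: blend the two colors.
        frame[minute_idx] = tuple(min(255, h + m) for h, m in zip(HOUR_COLOR, MINUTE_COLOR))
    else:
        frame[minute_idx] = MINUTE_COLOR
    return frame
```

Setting an alarm by touch is the inverse mapping: touching capsule `i` would select hour `i` (or minute `5 * i`).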

- Time frame

- 3 months. We would like to have this project ready around May 2011.

- Background & work by project submitter/s

- Studio Imaginaire is a company that focuses on open technologies, mainly in the fields of arts and media. We spent the last few years building an open-source multitouch table and participated actively in the community (http://nuigroup.com) while realizing the project. The Albino project is also part of a larger project called the Open Technology Cookshow, whose goal is to make a web show promoting the use of open technologies; the Albino would be the first project featured in the show. We would document the process of building the device and also provide some entertainment in the form of interviews with local artists and tinkerers. The core team for the Albino project consists of 5 people: Nathanaël Lécaudé as lead designer, Simon Emmanuel Roux as coordinator and web programmer, Eric Andrade as lighting specialist, Tomas Valencia as industrial designer and Carl Matteau-Pelletier as electronic engineer.

- Wiki/URL Links

- Our main site is located here: http://studioimaginaire.com

- Our last project is documented on our blog: http://studioimaginaire.com/blog (some content may be only in French). A video of our first lamp prototype (which was featured on Make and Engadget) can be seen here:

- Contact information

Open-Source Aerial Imagery platform (targeting autonomous aerial vehicles)

- Description

- We plan to develop an open-source, easily hackable aerial imagery platform targeting autonomous aerial vehicles. Recently there has been explosive growth in the open source UAV community, as well as similar growth in the commercial viability of these platforms for geographic/ecological surveys, crisis management, emergency response, agriculture monitoring, filming and photography, and much more. Our platform will be relatively low-cost (especially compared to commercial solutions) and will highlight the OMAP and PandaBoard features that make them excel in this application (onboard video camera, hardware-accelerated JPEG compression/decompression, high-speed Ethernet, wireless connectivity, low power, and more!).

- We will support controlling external digital cameras using a variety of protocols over the USB 2.0 host ports, the onboard camera support, and both at once. For storage, we'll support efficient and secure wireless transfer to a ground station (based on the implementation we used to win our competition last year) while simultaneously creating a local backup on a USB hard drive or on the SD card. Finally, we will run GIS (Geospatial Information Systems) software that allows users to select which areas of the ground they want imagery of, and have the platform trim out undesired (or overlapping) portions of images to maximize wireless throughput.

- Edit: I think this will mesh well with the rest of the Unmanned/Autonomous Aerial Vehicle projects already listed.
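Trimming overlapping image regions requires knowing how much new ground each frame covers. A minimal sketch, assuming a nadir-pointing (straight-down) camera over flat ground and a constant ground speed: the along-track footprint is 2 * h * tan(FOV / 2), and only the leading strip the aircraft has advanced since the previous frame is new. The function names and parameters are hypothetical; a real pipeline would use the GIS data and the full camera geometry.

```python
import math


def footprint_length(altitude_m: float, fov_deg: float) -> float:
    """Along-track ground coverage of one frame for a nadir camera over flat ground."""
    if altitude_m <= 0 or not 0 < fov_deg < 180:
        raise ValueError("altitude must be positive and FOV between 0 and 180 degrees")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def new_fraction(altitude_m: float, fov_deg: float,
                 ground_speed_mps: float, interval_s: float) -> float:
    """Fraction of each frame (along track) not already covered by the previous frame.

    Only this leading strip would need to be transmitted when the rest
    overlaps the previous image.
    """
    advance = ground_speed_mps * interval_s
    return min(1.0, advance / footprint_length(altitude_m, fov_deg))
```

For example, at 100 m altitude with a 60 degree along-track field of view, the footprint is about 115 m; flying at 20 m/s with one frame every 2 s, only about 35 % of each frame is new ground.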

- Time frame

- 4-5 months, we plan on having this platform mission ready for the 2011 competition (see below).

- Background & work by project submitter/s

- Lead software developer and Imagery system designer for the NCSU Aerial Robotics Team. Last year (2010) NCSU Aerial Robotics got top marks and First Place at the international AUVSI Unmanned Aerial Systems competition. The competition is to develop the best mission-capable autonomous aerial imagery platform for quickly and efficiently finding targets and points of interest. We believe the pandaboard is perfectly suited to this application, as it is smaller, lighter, and more efficient than previous platforms (from us and other teams). Our team has experience with ARM development (Linux and FreeRTOS as well as plain EABI) in other aspects of our competition, including designing and building target boards, developing and debugging software, and live in-mission testing/evaluation.

- Wiki/URL Links

- The group's front page is here. Our wiki page about the imagery system (and the Pandaboard proposal) is here.

- Contact information

LMCE Panda Port

- Description

- Linux Media Center Edition (LMCE) is a package developed for Kubuntu that aims to control all aspects of a home media environment, including lighting and thermostat. Details of LMCE can be found here [6]

- This project aims to use the Panda as a full-featured orbiter (see the link above for the definition) so that cost-effective home control panels can be installed in any room of a user's house.

- Time frame

- 3 months

- Background & work by project submitter/s

- The developer for this project has worked on the TI Android team for 4 years and is currently developing audio features for Blaze.

- Wiki/URL Links

- TBD, will update when available

- Contact information

Autotender

- Description

- The Autotender will be a machine that automatically creates mixed alcoholic or non-alcoholic drinks. Drinks will be selectable from a graphical user interface. The machine will contain hundreds of preset drinks and even allow custom drinks to be created on the fly by entering the ratio of ingredients and selecting a drink size. The project will also include RFID technology, allowing an individual's drinking to be tracked and compared with other individuals', and even enabling features such as BAC estimation. RFID will also make drink dispensing easier (we all know how difficult GUIs get after a "few" drinks) by dispensing either the last drink selected or a user-defined preset. More specific implementation details can be found on the project wiki page at http://www.omappedia.org/wiki/Autotender
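Two pieces of arithmetic sit behind this description: scaling a custom ratio to the selected drink size, and estimating BAC. A minimal sketch of both, assuming volumes in milliliters and ABV given as a fraction. The BAC estimate swaps in the classic Widmark approximation (distribution ratio about 0.68 for men and 0.55 for women, elimination about 0.015 % per hour), which is a rough population average, not the proposal's method and not a safety tool. All names are hypothetical.

```python
from typing import Dict

ETHANOL_DENSITY_G_PER_ML = 0.789


def scale_recipe(ratio: Dict[str, float], drink_size_ml: float) -> Dict[str, float]:
    """Turn relative parts (e.g. {"rum": 1, "cola": 3}) into milliliters per ingredient."""
    total_parts = sum(ratio.values())
    if total_parts <= 0:
        raise ValueError("ratio must contain at least one positive part")
    return {name: drink_size_ml * parts / total_parts for name, parts in ratio.items()}


def alcohol_grams(volumes_ml: Dict[str, float], abv: Dict[str, float]) -> float:
    """Grams of ethanol in a drink; `abv` is a fraction (0.40 for 40 %), missing means 0."""
    return sum(v * abv.get(name, 0.0) * ETHANOL_DENSITY_G_PER_ML
               for name, v in volumes_ml.items())


def widmark_bac(total_alcohol_g: float, body_weight_kg: float, hours: float,
                r: float = 0.68, beta_per_hour: float = 0.015) -> float:
    """Rough Widmark estimate of BAC in percent (g/100 mL), never below zero."""
    peak = total_alcohol_g / (body_weight_kg * 1000.0 * r) * 100.0
    return max(0.0, peak - beta_per_hour * hours)
```

An RFID tag would then key a per-user log of `alcohol_grams()` results and timestamps, from which `widmark_bac()` can be evaluated.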

- Time frame

- 3 months to working prototype, 6 months to finished appealing design

- Background & work by project submitter/s

- My name is Shane Anderson and I am currently a junior in Electrical Engineering at Western Kentucky University. I've worked on several projects, including designing a line-tracking robotic car, building and programming a robotic arm gripper to play chess, and more. I've also programmed video games for several platforms, including Xbox 360, Android and PC.

- Wiki/URL Links

- http://www.omappedia.org/wiki/Autotender

- Contact information

- or larry[.][.]edu

Phidippus Mystaceus

- Description

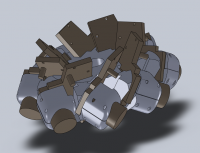

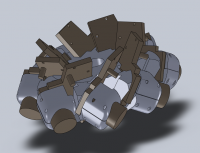


- Eight-legged walking robot. The brain is a Pandaboard, and the world is sensed with an omnidirectional camera.

- Time frame

- This is a recurring project with a 2-4 month deadline for completion. In December we plan to enter both robots in the Robotex 2011 competition.

- Background & work by project submitter/s

- Team members have a strong IT background and less robotics experience. We are members of the Tartu University robotics lab.

- * does electronics and programming.

- * Kalle-Gustav Kruus is good in SolidWorks and mechanics.

- We are working on two robots. Because you ask for our background, I include details of both:

- * Robot with interchangeable driving platform (wheels or legs, not important) but packed with eight cameras feeding 8x60 fps images to an onboard PC. Images are processed in real time on an NVIDIA GTS450 GPU.

- The old project was split into two because the legged system vibrates, which may destroy a lot of expensive hardware. So the eight-camera GPU robot will be redone with wheels, and the legged robot comes next:

- * Legged robot with omnivision for Robotex 2011. We plan to use a custom webcam with a Sony 360-degree lens, hidden underneath the body and facing down, and a lightweight computing system (Pandaboard!) to analyze the input.

- We have hardware left over from the previous attempt.

- Our tests indicate that the BeagleBoard C4 CPU is fully loaded just capturing a single VGA stream at 30 fps, with nothing left to process it afterwards. A dual-core Cortex-A9 should perform better. The BeagleBoard also lacks peripherals we need.

- Because of its physical layout (legs in front of the camera's view), the robot can only get a good image when it stops. This makes it act like a real animal: walk a few steps, stop to make decisions, walk again. We expect this to be a really funny side effect.

- We publish as much information as possible, so anyone is free to reproduce anything from our work.
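The camera load figures above are easier to interpret with the raw data rates in mind. A quick sketch of the arithmetic, assuming uncompressed YUYV (YUV 4:2:2) frames at 2 bytes per pixel, a common webcam format but an assumption here (MJPEG streams would be much smaller); the eight-camera rig's resolution is not stated, so VGA is assumed for it too.

```python
def raw_rate_mb_s(width: int, height: int, fps: float,
                  cameras: int = 1, bytes_per_pixel: int = 2) -> float:
    """Uncompressed video data rate in MB/s (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps * cameras / 1e6


if __name__ == "__main__":
    print(raw_rate_mb_s(640, 480, 30))             # one VGA stream at 30 fps
    print(raw_rate_mb_s(640, 480, 60, cameras=8))  # eight VGA cameras at 60 fps
```

Under these assumptions a single VGA stream at 30 fps is about 18.4 MB/s, while eight VGA cameras at 60 fps would be about 295 MB/s, which is why that rig needs a desktop-class PC and GPU.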

- Wiki/URL Links

- Old (slow etc) walking platform as we have it

- Robot preview (old type)

- Leg system in prototype phase

- Old SolidWorks design, etc http://digi.physic.ut.ee/mw/index.php/Spidertank

- Wiki where we have just started to rebuild all the information from the old pages (the old ones are inaccessible to the outside world): http://digi.physic.ut.ee/mw/index.php/Tiim_IT_Grupp

- Some more random photos of our past walker design under test:

- Lifting heavy weights

- --""--

- --""--

- Onboard computer box is empty. Testing cameras with laptop

- Just walking with wireless remote control

- Disassembled walker

- Contact information

Week 2 -- Projects from 3/7/11 - 3/11/11

In-Air Gesture User Interface

- Description

- The project aims to add an in-air gesture user interface to the embedded Linux platform running on Pandaboard. Hand gestures will be captured by a depth camera, and the data will be sent to the Pandaboard via USB. The data will be processed with the help of various Kinect open source projects to provide a gesture user input to the applications that will be developed, as listed below:

- Media player (hand gestures for playing, pausing, volume control), essentially a gesture TV

- Photo browser (zooming, rotating, swiping to next)

- Gaming (motion control)

- Augmented reality application (panning, selecting), utilizing the RGB capture of the depth camera

- 3D model/scene browser, which will incorporate head tracking
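A common first step in Kinect-style hand tracking, used here as an illustration rather than as the method of any particular open source project, is to treat the object closest to the depth camera as the hand: keep only pixels within a small band behind the nearest valid depth and take their centroid. A minimal sketch, assuming the depth frame arrives as rows of millimeter values with 0 meaning "no reading"; the 100 mm band and the function name are hypothetical.

```python
from typing import List, Optional, Tuple

DepthFrame = List[List[int]]  # rows of depth values in millimeters, 0 = no reading


def nearest_blob_centroid(frame: DepthFrame,
                          band_mm: int = 100) -> Optional[Tuple[float, float, float]]:
    """Return (x, y, mean depth) of pixels within `band_mm` of the nearest valid depth.

    Treating the closest region as the user's hand is a simplifying
    assumption that works when the hand is held out in front of the body.
    """
    valid = [d for row in frame for d in row if d > 0]
    if not valid:
        return None
    limit = min(valid) + band_mm
    xs = ys = ds = 0.0
    count = 0
    for y, row in enumerate(frame):
        for x, d in enumerate(row):
            if 0 < d <= limit:
                xs += x
                ys += y
                ds += d
                count += 1
    return xs / count, ys / count, ds / count
```

Tracking this centroid across frames yields the hand trajectory from which gestures such as swiping to the next photo, or pushing toward the camera to select, could be recognized.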

- Time frame

- 6 months

- Background & work by project submitter/s

- Multimedia University Faculty of Engineering lecturer for subjects such as Robotics & Automations, Embedded System Design and Engineering Mathematics.

- Android developer in the CodeAndroid Malaysia community, focusing on 3D, augmented reality and hardware such as robots. Gives presentations about Android at various national open source conferences and events.

- Supervising various Final Year Projects since 2008 related to 3D, natural user interface, augmented reality and Android.

- Involved in research projects and publications related to Wiimote and multi-touch tabletop.

- Wiki/URL Links

- Wiki page will be set up soon.

- A related blog post.

- Contact information

Glass Cockpit for Experimental Aircraft

- Description

Existing glass cockpits for experimental aircraft all have major deficiencies in their user interfaces, in my not so humble opinion. This project will generate an EADI (Electronic Attitude/Director Indicator), and will also incorporate engine instruments.

Two major shortcuts make this project feasible and safe for flight. The first is to buy an off-the-shelf ADAHRS (Air Data Attitude Heading Reference System), of which there are many on the market; it will provide air data (airspeed, altitude, vertical speed), attitude data (pitch, roll, yaw, plus rates and accelerations), a magnetometer and a GPS. The second is to use an engine monitor from Grand Rapids, because that monitor does all of the A/D conversions and provides its data on a serial output.

The remaining inputs are two serial ports for talking to the radios, specifically a Garmin 430W and a Garmin SL-30, plus an interface to dual concentric knobs as the only user interface control.

Futures (ha!) include Synthetic Vision, putting a computer-generated image of the ground on the background of the display. This is way cool in terms of glitz but limited in terms of utility. Also, it requires that the database be updated regularly. Another future is Enhanced Vision, using a low-light camera of some sort and putting that in the background. However, those cameras are expensive, and that would require both video in and out.
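At its core, the EADI draws an artificial horizon from the ADAHRS pitch and roll. A minimal sketch of that geometry, assuming screen coordinates with y pointing down, pitch positive nose-up, roll positive right-wing-down, and a linear pitch scale in pixels per degree; the function name and parameters are hypothetical, and a flight display needs much more (pitch ladder, clipping, failure flags).

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def horizon_line(pitch_deg: float, roll_deg: float, center: Point,
                 px_per_deg: float, half_length: float) -> Tuple[Point, Point]:
    """Return the two screen endpoints of the artificial horizon line.

    Assumed conventions: screen y grows downward; pitch > 0 (nose up) moves
    the horizon down; roll > 0 (right wing down) rotates the picture
    counterclockwise, so the right end of the horizon rises.
    """
    cx, cy = center
    phi = math.radians(roll_deg)
    offset = pitch_deg * px_per_deg
    # Horizon midpoint: shifted along the aircraft's (rolled) vertical axis.
    mx = cx + offset * math.sin(phi)
    my = cy + offset * math.cos(phi)
    # Unit vector along the horizon, rotated by the roll angle.
    ux, uy = math.cos(phi), -math.sin(phi)
    return ((mx - half_length * ux, my - half_length * uy),
            (mx + half_length * ux, my + half_length * uy))
```

Drawing the pitch ladder in the same translated and rotated frame keeps it consistent with the horizon line.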

- Time frame

This project would take a good year to do. Because it is a safety-of-flight system, even with only one unit built, there must be a structured documentation program to cover all the bases.

- Background & work by project submitter/s

20+ years in Silicon Valley, including Software Lead on the Topo robot; six years at Apple; safety research at NASA Ames and Boeing; Airline Transport Pilot and Certificated Flight Instructor; former college professor teaching aviation safety; and lots more.

- Wiki/URL Links


- Contact information

PandaMediaBox

- Description

- The PandaMediaBox will contain a 7-inch touch panel and will be used to play and control audio and video media. The goal is to play media from a SAN or NAS, IPTV, web radio, USB DVB sticks, USB storage devices, Bluetooth or even a VDR. For audio it will contain an integrated amplifier, and for video the user can choose between the integrated 7-inch panel and the HDMI output. All input is done via the touchscreen; additionally, it will be possible to control the box via a web interface and a secure interface, e.g. for smartphone applications.

- In particular, the following components will be developed, all of which can also be used in other projects:

- An easy-to-build small case with all connectors, room for a 7-inch in-frame display, an on/off switch and extra space for an 80 x 100 mm board for custom use.

- An easy-to-customize touch interface

- An easy-to-build, cheap audio amplifier with libraries to control it from the Pandaboard

- Full media player integration into the touch interface

- An easy-to-customize web interface for configuration and use

- An easy-to-customize socket interface for various other devices, possibly even between PandaBoards

- The PandaMediaBox will be designed to be very modular, so it should be possible to add nearly anything you can imagine, for example:

- Control lights and heating

- Use in the kitchen with a shopping list / recipe database

- Use as a NAS with a USB HDD

- Use as a router, or as a fallback device with a USB 3G or LTE modem

- The project will also have a community page and will be well documented, so that anyone can build their own PandaMediaBox.

- The distribution will consist of a base system with all interfaces plus separate modules such as the media player, so the community can quickly develop and distribute modules.

- Installing a module should take a single click on the display or in the web interface.
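For the socket interface and the secure interface for smartphone applications, one simple option is a line-based command protocol in which each command carries an HMAC computed with a secret shared between the box and the client. A minimal sketch, assuming a text format `<counter> <command> <hmac-hex>` made up for illustration; the strictly increasing counter stops simple replays, but the scheme provides no confidentiality, so a real deployment would more likely run over TLS. The class and function names are hypothetical.

```python
import hashlib
import hmac


def sign(secret: bytes, counter: int, command: str) -> str:
    """Build one protocol line: '<counter> <command> <hmac-hex>'."""
    payload = f"{counter} {command}".encode("utf-8")
    tag = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    return f"{counter} {command} {tag}"


class CommandVerifier:
    """Verifies signed lines and rejects replays via a strictly increasing counter."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret
        self._last_counter = -1

    def verify(self, line: str) -> str:
        """Return the command if the line is authentic and fresh, else raise ValueError."""
        parts = line.strip().split(" ")
        if len(parts) < 3:
            raise ValueError("malformed line")
        counter_text, command, tag = parts[0], " ".join(parts[1:-1]), parts[-1]
        try:
            counter = int(counter_text)
        except ValueError:
            raise ValueError("malformed counter") from None
        # Authenticate the exact text received, not a re-serialized form.
        payload = f"{counter_text} {command}".encode("utf-8")
        expected = hmac.new(self._secret, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected.encode("ascii"), tag.encode("utf-8")):
            raise ValueError("bad signature")
        if counter <= self._last_counter:
            raise ValueError("replayed or out-of-order command")
        self._last_counter = counter
        return command
```

Over a socket, each received line would be passed to `verify()`, and the returned command (for example `volume 40`) dispatched to the matching module.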

- Time frame

- 3 months for the first usable beta, 6 months to include all features in a stable version

- Background & work by project submitter/s

- Computer science student; I have worked on an embedded sensor network and on larger software projects.

- Wiki/URL Links

- Not available yet; the community page will start with the project.

- Contact information

Helping interface for dependent people

- Description

- Hardware & Software

- Gesture/voice interface to help people with disabilities (blindness, paraplegia, quadriplegia) and elderly people stay independent:

- For different use cases:

- - To locate: a speaking GPS that tells the current location (street name) on request (button or speech recognition).

- - Read and tell (OCR and speech synthesis)

- - Speech recognition to drive actuators

- - Gesture (eye or body movements) or speech commands to trigger actuators

- - Status of remote or local sensors in an equipped home

- - Easy communication (VoIP and a connected link over Wi-Fi/GSM)

- Providing all these features in software:

- - Gesture integration and OCR character reading: image sensor and image processing

- - Voice recognition / speech synthesis: audio processing

- - Actuators and sensors (local: serial/Ethernet link; remote: Bluetooth/Wi-Fi)

- - Speech synthesis integrated for reading or locating

- - VoIP communication (start/stop calls with a predefined list of contacts, triggered by voice or gesture)

- I know all these functions already exist separately, but I want to integrate them into one system with all the advantages of the Pandaboard:

- - Small form factor

- - Low power consumption

- - Connectivity (video sensors, Wi-Fi, Bluetooth, ...)

- - Processing power for video and audio

- - Linux and OMAP Community

- - Get a fully integrated solution with one small board!!!

- - Pandas are peaceful and powerful bears!

- Time frame

- First hardware and software integration (within 6 months):

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - GPS (UART)

- - USB webcam

- - Bluetooth sensor/actuator modules

- Software

- - Gesture recognition (hand, body movement) triggering linked actions

- - Speech recognition triggering linked actions

- - Speech synthesis

- - Actuators and sensors (I already have some Bluetooth/serial sensors and actuators)

- - Get the street position announced via speech synthesis

- Second hardware and software integration

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - Design of an extension board with GPS (external module with UART) and image sensor

- - Battery pack and case

- Software

- - Eye movement recognition triggering linked actions

- - A graphical interface for helpers to adjust settings and options

- - Deeper integration with the person's environment (generally the home, for quadriplegic or elderly people)

- Background & work by project submitter/s:

- - Personally concerned by people with disabilities and elderly people

- - Already built some assistive electronics (sensors, actuators)

- - Want to develop an open community of helpers around the Pandaboard

- - Work in electronic engineering (software AND hardware!)

- - Developed electronic hardware devices (digital cameras, Bluetooth sensors and actuators)

- - Master's degree in signal (audio and image) processing: OpenCV is my best friend!

- - Developed Linux drivers for image sensors, PCI boards, LCD displays, ...

- - Got some gesture recognition working on my Intel-based Ubuntu computer

- Wiki/URL Links

- Gesture (wayv) : [7]

- OpenCVwiki : [8]

- Speech recognition (cmu-sphinx) : [9]

- Text to speech (festival) : [10]

- GPS (navit) : [11]

- Contact information

3G Router

- Description

Hi,

So, in short, the use cases are:

- 3G router: uses the Wi-Fi chip and the USB ports; benefits driver documentation.

- LAN router: uses the LAN and Wi-Fi together; benefits forum documentation.

- Media streamer/decoder: uses the 1080p decode facility and the HDMI and DVI outputs; benefits driver and forum documentation.

- Vehicle diagnostics: uses the serial port; an entirely new aftermarket field of application.

I explain some of these in detail below. I'm new to all this, but here is what I want to do at a minimum:

- Use the Pandaboard as a 3G/4G broadband router with Squid caching. The connection will be shared over Wi-Fi as well as LAN.

- Put it in a suitable enclosure, mount it in a vehicle and work out how to power the board.

- For now the plan is a simple 12 V to 5 V buck converter. It can be improved by adding a delay to accommodate the car's startup phase.

- That way I can have Wi-Fi in the car for maps, GPS, etc. Squid will help here with repeatedly visited locations.

- It should be portable so that I can regularly take it out and use it at home as well.
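For sizing the 12 V to 5 V buck converter planned above, the ideal (lossless, continuous-conduction) buck relations give a feel for the numbers. A worked sketch, assuming a 2 A load on the 5 V rail and 90 % converter efficiency (both illustrative assumptions, not measured Pandaboard figures), and taking 14.4 V as a typical voltage while the alternator is charging:

```latex
\begin{aligned}
D &= \frac{V_\text{out}}{V_\text{in}}, \qquad
D_{12\,\mathrm{V}} = \frac{5}{12} \approx 0.42, \qquad
D_{14.4\,\mathrm{V}} = \frac{5}{14.4} \approx 0.35 \\
I_\text{in} &\approx \frac{V_\text{out}\, I_\text{out}}{\eta\, V_\text{in}}
  = \frac{5\,\mathrm{V} \times 2\,\mathrm{A}}{0.9 \times 12\,\mathrm{V}} \approx 0.93\,\mathrm{A}
\end{aligned}
```

A converter whose rated input range covers both of these voltages with margin is the safer choice; the startup delay then deals with the voltage dip while the engine cranks.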

Software-wise, I want to start off by solving this problem in Ubuntu. That way I can post the steps to the forums/wiki pages. This process will let me see all the challenges with Wi-Fi drivers and with compiling packages that already exist for i386 but NOT for ARM. If need be, I'll set up a Launchpad PPA so that others can use the packages I've compiled for the board.

I then want to explore MeeGo and Android options. The board might be overkill for some of these, but the advantage is that it can be quickly repurposed for something else, as the hardware will remain capable for quite a while!

Other desired applications while in the car:

- Would like to see if I can hook it up to the On-Board Diagnostics port and do some logging.

- Maybe hook a camera up to it somehow. Useful for fun random pictures :D

- Maybe get the interface onto my Android phone somehow. VNC maybe?

- Maybe make it a car entertainment system?
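For the On-Board Diagnostics logging idea, standard OBD-II mode 01 responses are easy to decode once the raw bytes arrive over the serial port. A minimal sketch, assuming an ELM327-style adapter that returns hex bytes such as `41 0C 1A F8` (a common setup, but not one named in this proposal); only three well-known PIDs are decoded, and the function name is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Mode 01 PIDs (SAE J1979): name, number of data bytes, formula on those bytes.
PIDS: Dict[int, Tuple[str, int, Callable[[List[int]], float]]] = {
    0x05: ("coolant_temp_c", 1, lambda b: b[0] - 40),
    0x0C: ("engine_rpm", 2, lambda b: (256 * b[0] + b[1]) / 4),
    0x0D: ("speed_kmh", 1, lambda b: b[0]),
}


def decode_mode01(response: str) -> Tuple[str, float]:
    """Decode a mode 01 reply such as '41 0C 1A F8' into (name, value)."""
    data = [int(tok, 16) for tok in response.split()]
    if len(data) < 2 or data[0] != 0x41:  # 0x41 = positive reply to mode 01
        raise ValueError("not a mode 01 response")
    pid = data[1]
    if pid not in PIDS:
        raise ValueError("unsupported PID 0x%02X" % pid)
    name, nbytes, formula = PIDS[pid]
    payload = data[2:2 + nbytes]
    if len(payload) < nbytes:
        raise ValueError("truncated response")
    return name, formula(payload)
```

Polling would mean writing requests such as `010C` to the adapter's serial port (for example with pySerial) and logging each decoded value with a timestamp.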

While at home/office:

- Use as a low-power media center, hopefully playing Full HD but at least 720p

- Network switch/router, as in the car

- Use as a plain LAN-to-Wi-Fi router when proxy settings cannot be specified on the user devices.

- Time frame

2 weeks for the main 3G router part. I'll add more uses for it as I go along, so there is no definite time frame for those.

- Background & work by project submitter/s

By profession I am a mechanical engineer. I work in MATLAB on Ubuntu Lucid. I have some experience running a basic Linux server with a DC hub, Samba file sharing and DHCP, and I want to apply that experience to this ARM-based project. I regularly submit bug reports and collaborate on forums to get answers and help others; I want to do the same to make Pandaboard development easier. I would basically be documenting my beginner's experience for others who are new to embedded computing.

- Wiki/URL Links

http://omiio.org/content/3g-router

I will create a wiki page ASAP. There is nothing on the blog site for now.

- Contact information

[.]za

Amahi on Panda (PandAmahi :-) )

- Description

The aim is to port the Amahi Linux home server to the PandaBoard. Amahi is a home server that is very easy to install and configure.

A good overview of Amahi can be found at http://www.amahi.org/features

The current version of Amahi only runs on Fedora. Since the Fedora ARM version is missing quite a few packages, the goal is to get Amahi running on the PandaBoard under Debian and/or Ubuntu. This involves a considerable porting effort due to differences in package management (rpm vs. deb) and in configuration file locations and structure (e.g. httpd.conf and /etc/sysconfig on Fedora versus apache2.conf and various other locations for configuration files on Debian).

End result should be a working Amahi system supporting most (if not all) Amahi packages (sabnzbd, ushare, bittorrent, mediawiki, greyhole, Ampache, ...).

A complete list can be found at http://www.amahi.org/apps?s=all

A feasibility study for the project has already been started (so far it has shown a lot of work, but no blocking issues have been identified).

All of the code will be released under GPL (the current code of Amahi is also under GPL).
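Much of the porting work described above is a systematic translation of Fedora conventions into Debian ones. A minimal sketch of how such a translation table might look; the entries are well-known defaults on the two distributions (not taken from the Amahi code base), and the helper names are hypothetical. Amahi's own scripts would still need auditing case by case.

```python
from typing import Dict, List

# Fedora default -> Debian/Ubuntu default (illustrative, well-known entries only).
CONFIG_PATHS: Dict[str, str] = {
    "/etc/httpd/conf/httpd.conf": "/etc/apache2/apache2.conf",
    "/etc/sysconfig/network-scripts/ifcfg-eth0": "/etc/network/interfaces",
}
PACKAGE_NAMES: Dict[str, str] = {
    "httpd": "apache2",  # the Apache package and service names differ
}


def debian_path(fedora_path: str) -> str:
    """Map a Fedora config path to its Debian counterpart, or fail loudly."""
    try:
        return CONFIG_PATHS[fedora_path]
    except KeyError:
        raise KeyError(f"no Debian mapping recorded for {fedora_path}") from None


def install_command(packages: List[str]) -> List[str]:
    """Translate 'yum install <pkgs>' into the equivalent apt-get command line."""
    return ["apt-get", "install", "-y"] + [PACKAGE_NAMES.get(p, p) for p in packages]


def query_command(package: str) -> List[str]:
    """Translate 'rpm -q <pkg>' into 'dpkg -s <pkg>'."""
    return ["dpkg", "-s", PACKAGE_NAMES.get(package, package)]
```

Making unmapped paths raise instead of passing through unchanged forces every Fedora-specific assumption in the scripts to be reviewed explicitly.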

- Time frame

Approximately three months.

- Background & work by project submitter/s

I have about 25 years of experience in Unix/Linux programming (I started with Unix V7 on an MC68020). In the past I created my own home server for the BeagleBoard; see http://elinux.org/BeagleBoard/James for details. For my previous employer I was also involved in building a prototype home server and in implementing a media player.

The rationale for moving away from James towards Amahi is that Amahi is much more advanced when it comes to ease of installation and user interface.

- Wiki/URL Links

Amahi has a website at http://www.amahi.org and a wiki at http://wiki.amahi.org. On this wiki a section for this project will be created.

- Contact information

fransmeulenbroeks at gmail dot com

Phidippus Mystaceus

- Description


- Eight-legged walking robot. The brain is a Pandaboard, and the world is sensed with an omnidirectional camera plus high-zoom camera(s) in front. Inspiration is taken from the vision system of real spiders: http://australianmuseum.net.au/Uploads/Images/2151/toolkit_sight_jumpingvision.jpg

- Time frame

- This is a recurring project with a 2-4 month deadline for completion. In December we plan to enter both robots (read on) in the Robotex 2011 competition.

- Background & work by project submitter/s

- Team members have a strong IT background and less robotics experience. We are members of the Tartu University robotics lab.

- * does electronics and programming.

- * Kalle-Gustav Kruus is good in SolidWorks and mechanics.

- We are working on two robots. Because you ask for our background, I include details of both:

- * Robot with interchangeable driving platform (wheels or legs, not important) but packed with eight cameras feeding 8x60 fps images to an onboard PC. Images are processed in real time on an NVIDIA GTS450 GPU.

- The old project was split into two because the legged system vibrates, which may destroy a lot of expensive hardware. So the eight-camera GPU robot will be redone with wheels, and the legged robot comes next:

- * Legged robot with omnivision for Robotex 2011. We plan to use a custom webcam with a Sony 360-degree lens, hidden underneath the body and facing down, and a lightweight computing system (Pandaboard!) to analyze the input.

- We have hardware left over from the previous attempt.

- Our tests indicate that the BeagleBoard C4 CPU is fully loaded just capturing a single VGA stream at 30 fps, with nothing left to process it afterwards. A dual-core Cortex-A9 should perform better. The BeagleBoard also lacks peripherals we need. EDIT: It seems OpenCV causes most of the overload. Last week we got 400 fps with CPU load under 1% after switching to direct V4L camera access.

- Because of its physical layout (legs in front of the camera's view), the robot can only get a good image when it stops. This makes it act like a real animal: walk a few steps, stop to make decisions, walk again. We expect this to be a really funny side effect.

- We publish as much information as possible, so anyone is free to reproduce anything from our work.
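The walk/stop/decide cycle described above can be modelled as a two-state loop. The Python sketch below is a minimal illustration, not the team's controller: `steps_per_leg` and the `decide` callback (standing in for the image analysis done while the robot stands still) are hypothetical names introduced here.

```python
from enum import Enum, auto
from typing import Callable, List


class Mode(Enum):
    WALK = auto()    # legs moving, camera view partly blocked
    DECIDE = auto()  # robot stopped, full view available for image analysis


def run_cycle(steps_per_leg: int,
              decide: Callable[[int], bool],
              max_ticks: int) -> List[Mode]:
    """Simulate the walk/stop loop for max_ticks ticks.

    steps_per_leg: hypothetical number of gait steps taken before stopping.
    decide: hypothetical callback run while stopped; receives the stop count
            and returns True to walk again, False to stay stopped.
    Returns the mode recorded at each tick.
    """
    mode = Mode.WALK
    steps = 0
    stops = 0
    trace: List[Mode] = []
    for _ in range(max_ticks):
        trace.append(mode)
        if mode is Mode.WALK:
            steps += 1
            if steps >= steps_per_leg:
                mode = Mode.DECIDE  # stop so the legs leave the field of view
        else:
            stops += 1
            if decide(stops):
                steps = 0
                mode = Mode.WALK
    return trace
```

Keeping the vision work inside the `DECIDE` state means the expensive processing only runs on frames where the legs are out of the way.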

- Wiki/URL Links

- Old (slow etc) walking platform as we have it

- Robot preview (old type)

- Leg system in prototype phase

- Old SolidWorks design, etc http://digi.physic.ut.ee/mw/index.php/Spidertank

- Wiki where we just started to rebuild all information from old pages (old ones are inaccessible to outside world :() http://digi.physic.ut.ee/mw/index.php/Tiim_IT_Grupp

- Some more random photos about our past walker design in test:

- Lifting heavy weights

- --""--

- --""--

- Onboard computer box is empty. Testing cameras with laptop

- Just walking with wireless remote control

- Disassembled walker

- Contact information

Week 3 -- Projects from 3/14/11 - 3/18/11

Docking Station for In-Air Gesture User Input and 3D Pseudo-Holographic Display

- Description

- The project aims to develop a docking station for devices such as the Pandaboard by adding an in-air gesture user interface and a pseudo-holographic 3D display to the embedded Linux platform. In pseudo-holographic display mode, the display connected to the Pandaboard via HDMI will be projected onto a pseudo-holographic setup that uses the pyramid-shaped Pepper's ghost effect. The reason for this kind of display is that we expect people to embrace 3D, and this is the simplest way to implement naked-eye 3D. Hand gestures, which are inherently natural and intuitive, will be captured by a depth camera from a distance, and the data will be processed with the help of various open source 3D depth camera (Kinect) projects to provide gestural user input to the applications that will be developed for the following scenarios:

- Media player (hand gestures for playing, pausing, volume control), essentially a gesture TV

- Photo browser (zooming, rotating, swiping to next)

- Gaming (motion control)

- Augmented reality application (panning, selecting), utilizing the RGB capture of the depth camera

- 3D model/scene browser, which will incorporate head tracking

Both input and output processing require intensive computing power, which the Pandaboard's high-speed symmetric multiprocessing, powerful graphics core, and multimedia accelerator make possible. This project will be a glimpse into the future of mobile computing and will hopefully bring usefulness and convenience to everyone in scenarios such as entertainment, virtual shopping, health care, information kiosks, advertising, education, etc.
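As an illustration of how tracked hand positions could become commands for the media player or photo browser scenarios, here is a minimal Python sketch of horizontal swipe detection. It assumes the depth-camera libraries already deliver one hand x-coordinate in metres per frame; the `min_travel` and `window` values are illustrative guesses, not tuned parameters from the project.

```python
from typing import Optional, Sequence


def detect_swipe(xs: Sequence[float],
                 min_travel: float = 0.25,
                 window: int = 10) -> Optional[str]:
    """Classify the last `window` hand x-positions as a horizontal swipe.

    Returns "swipe_right" or "swipe_left" when the hand has moved at least
    `min_travel` metres in one direction over the window, otherwise None.
    """
    if len(xs) < 2:
        return None
    recent = list(xs[-window:])
    travel = recent[-1] - recent[0]
    # Require mostly monotonic motion so hand jitter does not trigger a swipe.
    steps = [b - a for a, b in zip(recent, recent[1:])]
    same_dir = sum(1 for s in steps if s * travel > 0)
    if abs(travel) >= min_travel and same_dir >= 0.8 * len(steps):
        return "swipe_right" if travel > 0 else "swipe_left"
    return None
```

In the photo browser, `swipe_left`/`swipe_right` could map to previous/next; the monotonicity check is a cheap guard against a hand that merely wobbles in place.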

- Time frame

- 6 months

- Background & work by project submitter/s

- Lecturer at the Multimedia University Faculty of Engineering for subjects such as Robotics & Automation, Embedded System Design and Engineering Mathematics.

- Android developer in the CodeAndroid Malaysia community, focusing on 3D, augmented reality and hardware such as robots. Gives presentations about Android at various national open source conferences and events.

- Has supervised various Final Year Projects related to 3D, natural user interfaces, augmented reality and Android since 2008.

- Involved in research projects and publications related to the Wiimote and multi-touch tabletops.

- Wiki/URL Links

- Wiki page will be set up soon.

- A related blog post.

- Contact information

Electronic interface support for dependents

- Description

- Hardware & Software

- A gesture/vocal interface to help people with disabilities (blindness, paraplegia, quadriplegia) or elderly people keep their independence:

- For different use cases:

- - To locate: a speaking GPS telling the location (street name) on request (button or speech recognition).

- - Read and tell (OCR and speech synthesis)

- - Actuators controlled by speech recognition

- - Gesture (eye movement or body movements) or speech commands to trigger actuators

- - Status of remote or local sensors in an equipped home

- - Communicate easily (VoIP and connectivity through Wi-Fi/GSM)

- Providing all these features in software:

- - Gesture integration and OCR character reading: image sensor and image processing

- - Voice recognition / speech synthesis: audio processing

- - Actuators and sensors (local: serial/Ethernet link; remote: Bluetooth/Wi-Fi)

- - Speech synthesis integrated for reading or locating

- - VoIP communication (start and stop calls with a predefined list of contacts, triggered by voice or gesture)

- I know all these functions already exist separately, but I want to integrate them in one system with all the advantages of the Pandaboard:

- - Small form factor

- - Low power consumption

- - Connectivity (video sensors, Wi-Fi, Bluetooth, ...)

- - Power for video and audio processing

- - Linux and OMAP Community

- - Get a fully integrated solution with one small board!!!

- - Pandas are peaceful and powerful bears!
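Whatever produces the event (cmu-sphinx for speech, an OpenCV pipeline for gestures), each recognized command ends up triggering a linked action. A minimal Python sketch of that dispatch layer, assuming recognizers emit plain text labels; the labels and actions registered below are hypothetical examples.

```python
from typing import Callable, Dict


class CommandDispatcher:
    """Map recognized speech phrases or gesture labels to linked actions."""

    def __init__(self) -> None:
        self._actions: Dict[str, Callable[[], str]] = {}

    @staticmethod
    def _normalize(label: str) -> str:
        # Recognizers differ in case and spacing; compare canonical forms.
        return " ".join(label.lower().split())

    def register(self, label: str, action: Callable[[], str]) -> None:
        self._actions[self._normalize(label)] = action

    def dispatch(self, label: str) -> str:
        action = self._actions.get(self._normalize(label))
        if action is None:
            # Could be spoken back to the user through speech synthesis.
            return "unknown command"
        return action()


# Hypothetical bindings: one spoken phrase and one gesture label.
dispatcher = CommandDispatcher()
dispatcher.register("where am I", lambda: "announce street name")
dispatcher.register("blink_twice", lambda: "call first contact")
```

Keeping speech and gesture labels in one table lets a helper reassign actions without touching the recognizers.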

- Time frame

- First hardware and software integration (within 6 months):

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - GPS (UART)

- - USB webcam

- - Bluetooth sensor/actuator modules

- Software

- - Gesture recognition (hand, body movement) triggering linked actions

- - Speech recognition triggering linked actions

- - Speech synthesis

- - Actuators and sensors (I already have some Bluetooth/serial sensors and actuators)

- - Get spoken street position (GPS plus speech synthesis) working

- Second hardware and software integration

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - Design of an extension board with GPS (external UART module) and image sensor

- - Battery pack and case

- Software

- - Eye movement recognition triggering linked actions

- - Develop a graphical interface for helpers to configure settings

- - Deeper integration with the person's environment (generally the home, for quadriplegic or elderly people)

- Background & work by project submitter/s:

- - Personally concerned by people with disabilities and elderly people

- - Already set up some assistive electronics (sensors, actuators)

- - Want to develop an open community of helpers around the Pandaboard

- - Work in electronic engineering (software AND hardware!)

- - Developed electronic hardware devices (digital cameras, Bluetooth sensors and actuators)

- - Hold a master's degree in signal (audio and image) processing: OpenCV is my best friend!

- - Developed Linux drivers for image sensors, PCI boards, LCD displays, ...

- - Have some gesture recognition working on my Intel-based Ubuntu computer

- Wiki/URL Links

- Gesture (wayv) : [12]

- OpenCVwiki : [13]

- Speech recognition (cmu-sphinx) : [14]

- Text to speech (festival) : [15]

- GPS (navit) : [16]

- Contact information

OpenMSR

- Description

OpenMSR is an Instrumentation, Control and Automation (ICA) application that lets you control real things/devices quickly and easily. I want to port the existing app to the Pandaboard because it is a fast, low-power hardware platform, well suited to ICA applications.

- Time frame

Since it has already been ported to the ARM platform, I expect it to be a matter of just a few days.

- Background & work by project submitter/s

I'm the main developer of the existing project and have done all the work myself.

- Wiki/URL Links

See the projects website at http://www.openmsr.org/

Internet Audio Broadcast Catcher/Downloader/Player?

- Description

- The project will be a command-line daemon with a web API for user input. It will receive a list of RSS feeds from the user, parse the feeds and, depending on user interaction, download the episodes. The application will be written in the Vala language and will either use GStreamer for media playback or wrap MPlayer. A jQuery-based web interface will allow computers on the same network to control the application via a web browser, and the web API will allow others to develop GUI applications that communicate with the daemon.
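The feed-parsing step at the core of the daemon could look like the Python sketch below (the project itself plans to use Vala; Python is used here only for illustration). It uses the standard library to extract episode titles and media URLs from the `<enclosure>` elements of an RSS 2.0 feed; downloading, playback, and the web API are out of scope, and the sample feed is made up.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def parse_episodes(rss_text: str) -> List[Tuple[str, str]]:
    """Return (title, enclosure URL) pairs for items that carry an enclosure."""
    root = ET.fromstring(rss_text)
    episodes: List[Tuple[str, str]] = []
    # RSS 2.0 layout: <rss><channel><item>...</item></channel></rss>
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        if enclosure is None or not enclosure.get("url"):
            continue  # no media attached, so not a downloadable episode
        title = item.findtext("title", default="(untitled)")
        episodes.append((title, enclosure.get("url")))
    return episodes


SAMPLE = """<rss version="2.0"><channel><title>Demo</title>
<item><title>Episode 1</title>
<enclosure url="http://example.com/ep1.mp3" length="123" type="audio/mpeg"/></item>
<item><title>News only</title></item>
</channel></rss>"""
```

The daemon would compare these URLs against what it has already downloaded and queue only the new ones.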

- Time frame

- As I would be working on this in my spare time, it would probably take a week or two.

- Background & work by project submitter/s

- A listing of projects that I have made is available at my website. Four of the projects are written in Vala, three utilize Gstreamer, and one parses XML. It should also be noted that I have some sweet sideburns.

- Wiki/URL Links

- A launchpad project will be created when I decide upon a decent name. (muttonchop has a nice ring to it.)

- Contact information

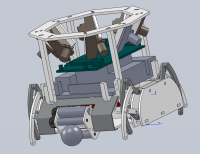

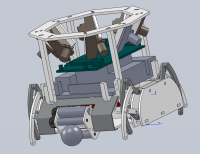

Football playing robot

- Description

- The project is to build an autonomous robot that plays football with golf balls on a 2 m × 3 m arena according to the Robotex rules. Robotex is an annual open Estonian robotics competition, mainly between the three largest informatics-related universities: the University of Tartu, Tallinn University of Technology and the Estonian IT College. The goal of the competition is to raise the popularity of exact sciences and computer science. Our team is one of the participating teams from the University of Tartu.

This year we are trying to build a smaller and faster robot than any robot seen at this competition so far. Features of our new robot include:

- omnidirectional movement using brushless motors and self-designed omniwheels

- positioning using magnetic Hall-effect sensors on the wheels and data from image processing

- localisation and mapping of target balls, goals and the opponent using a self-designed, hyperbolic-mirror-based omnidirectional camera system
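The Hall-sensor positioning mentioned above amounts to wheel odometry. The text does not state the wheel layout, so this Python sketch assumes a common three-omniwheel base with wheels spaced 120° apart at distance `r` from the centre, each driving tangentially; it converts measured wheel surface speeds into body velocity and back.

```python
import math
from typing import Sequence, Tuple

# Assumed wheel mounting angles (radians), measured from the robot's x axis.
WHEEL_ANGLES = (0.0, 2 * math.pi / 3, 4 * math.pi / 3)


def wheel_speeds(vx: float, vy: float, omega: float, r: float) -> Tuple[float, ...]:
    """Inverse kinematics: body velocity -> tangential speed of each wheel."""
    return tuple(-math.sin(a) * vx + math.cos(a) * vy + r * omega
                 for a in WHEEL_ANGLES)


def body_velocity(v: Sequence[float], r: float) -> Tuple[float, float, float]:
    """Forward kinematics (odometry): wheel speeds -> (vx, vy, omega).

    Closed-form inverse that holds only for three wheels spaced 120° apart,
    where sum(sin^2) = sum(cos^2) = 3/2 and sum(sin) = sum(cos) = 0.
    """
    vx = (2 / 3) * sum(-math.sin(a) * vi for a, vi in zip(WHEEL_ANGLES, v))
    vy = (2 / 3) * sum(math.cos(a) * vi for a, vi in zip(WHEEL_ANGLES, v))
    omega = sum(v) / (3 * r)
    return vx, vy, omega
```

Integrating `(vx, vy, omega)` over each sampling interval gives a dead-reckoned pose; it drifts with wheel slip, which is where the image-processing data would correct it.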

Our team's goal is to design a robot that fits in a cylinder 17 cm in diameter, so that it would also comply with the RoboCup SSL size limits. That way the design and solutions could be reused if our university wishes to compete there in the future. Given the size constraint and the need to process video on board, the Pandaboard with its OMAP4 processor would be just right. We could also make great use of some other Pandaboard features, including

- Wi-Fi for wireless debugging,

- chance to offload some video processing to DSP,

- direct communication with lower level electronics through extension port

- and possibly camera port if we should succeed in finding appropriate camera,

- passive cooling for simplicity

- ... so Pandaboard would be ideal :)

- Time frame


- In spring we are developing our mechanical design, testing and constructing electronics, writing and testing new software on our previous robot and simultaneously on the new platform - hopefully Pandaboard.

- At the beginning of summer we will build the parts from the SolidWorks design and continue software development.

- In autumn we will test the whole design.

- Fully working robot must be ready for the competition in beginning of December 2011.

- Background & work by project submitter/s

- Our team has participated in the same event several times. Last year we designed our mirror system and reused the omnidirectional drive first used for Robotex 2008. So every year we build on our old know-how and develop something new. Last time we used a laptop motherboard, but we can't use one this year as they are too large. All information about last year's robot is available in our Team Description Paper (so far only in Estonian, but don't hesitate to ask if you would like some additional info).

- We are all students of physics or computer architecture at the University of Tartu. We are supervised and helped by mentors in our university's robotics club. There are several other projects in the club, for example designing a swarm of 3×3×3 cm robots. Some people in the club have been building and programming robots for more than ten years, so there is all the help we need.

- Wiki/URL Links


- Robotex page on webpage of our club

- Our last year log including some pictures

- blueprint of our last robot

- SolidWorks rendered image of field on the mirror

- of our image processing algorithms in action

- Contact information

- johu at ut.ee - mihkel.heidelberg at gmail.com

PandAmahi: Amahi on Panda

- Description

The aim is to port the Amahi Linux home server to the PandaBoard. Amahi is a very easy to install and configure home server.

A good overview of Amahi can be found at http://www.amahi.org/features

The current version of Amahi only runs on Fedora. Since the Fedora ARM version is missing quite a few packages, the goal is to get Amahi running on the PandaBoard under Debian and/or Ubuntu. This involves a fair amount of porting effort due to the differences in package management (rpm vs. deb) and in configuration file locations and structure (e.g. httpd.conf and /etc/sysconfig on Fedora versus apache2.conf and various locations for configuration files on Debian).

End result should be a working Amahi system supporting most (if not all) Amahi packages (sabnzbd, ushare, bittorrent, mediawiki, greyhole, Ampache, ...).

A complete list can be found at http://www.amahi.org/apps?s=all

A feasibility study for the project has already been started (a lot of work so far, but no blocking issues have been identified).

All of the code will be released under GPL (the current code of Amahi is also under GPL).
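One way to contain the Fedora/Debian differences described above is a small lookup layer that installer scripts query instead of hard-coding paths and commands. This is a hypothetical Python sketch, not Amahi's code: the key names are invented, and only a few well-known entries (the standard Apache configuration paths, service names, and package managers on each distribution) are filled in.

```python
from typing import Dict, List

# Per-distribution locations and names (illustrative subset only).
DISTRO_LAYOUT: Dict[str, Dict[str, str]] = {
    "fedora": {
        "apache_conf": "/etc/httpd/conf/httpd.conf",
        "service_defaults_dir": "/etc/sysconfig",
        "apache_service": "httpd",
    },
    "debian": {
        "apache_conf": "/etc/apache2/apache2.conf",
        "service_defaults_dir": "/etc/default",
        "apache_service": "apache2",
    },
}
DISTRO_LAYOUT["ubuntu"] = DISTRO_LAYOUT["debian"]  # Ubuntu follows Debian

INSTALL_CMD: Dict[str, List[str]] = {
    "fedora": ["yum", "install", "-y"],
    "debian": ["apt-get", "install", "-y"],
    "ubuntu": ["apt-get", "install", "-y"],
}


def lookup(distro: str, key: str) -> str:
    """Return a distro-specific path or name, failing loudly if unmapped."""
    try:
        return DISTRO_LAYOUT[distro][key]
    except KeyError as exc:
        raise KeyError(f"no mapping for {key!r} on {distro!r}") from exc


def install_command(distro: str, packages: List[str]) -> List[str]:
    """Build (but do not run) the package-manager command line."""
    return INSTALL_CMD[distro] + list(packages)
```

Failing loudly on an unmapped key surfaces every Fedora-only assumption in the existing code during porting, rather than at run time on a user's board.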

- Time frame

Approximately three months.

- Background & work by project submitter/s

I have about 25 years of experience in Unix/Linux programming (started with Unix V7 on MC68020). In the past I created my own home server for the BeagleBoard. See http://elinux.org/BeagleBoard/James for details. For my previous employer I was also involved in realizing a prototype home server and in the implementation of a media player.

The rationale for moving away from James towards Amahi is that Amahi is much more advanced when it comes to easy installation and user interface.

- Wiki/URL Links

Amahi has a website at http://www.amahi.org and a wiki at http://wiki.amahi.org. On this wiki a section for this project will be created.

- Contact information

fransmeulenbroeks at gmail dot com

Panda data-acquisition and FPGA dev board

- Description

Amateur scientists and other home experimenters often have difficulty finding data-acquisition (DAQ) hardware meeting their needs at reasonable cost. PC sound-card I/O is sometimes adequate; otherwise, commercial DAQ offerings in the sub-$200 range are quite limited. (In my case, the immediate motivation for this project is data collection from a magnetometer observing solar-activity-induced disturbances in the Earth's magnetic field.)

The Pandaboard is ideal for this application because it is inexpensive, low-power, portable, runs Linux, and offers easy high-bandwidth expansion through the GPMC bus. I propose designing an expansion board with:

- multiple 16-bit A/D and D/A channels

- extra digital I/O

- programmable logic for timer/counter functions and periodic sampling

- GPS for mobile data-logging, timestamping, and oscillator calibration

- McSPI and GPMC bus interfaces at the Pandaboard expansion connectors

A Xilinx Spartan-6 FPGA will bridge the various functions with the Pandaboard interfaces. The design will be modular, such that the A/D, D/A, and GPS can be omitted, resulting in a board very similar to Eric Brombaugh's Beagleboard Tracker. Thus, this project will serve double-duty as a Tracker refresh for the Pandaboard.
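On the software side, each A/D sample arriving over McSPI or GPMC has to be converted to a physical value. The converter is not named above, so this Python sketch assumes a bipolar 16-bit ADC with a ±2.5 V full scale, delivering two's-complement codes most significant byte first; both the reference voltage and the byte order would need to match the chosen part.

```python
from typing import List


def code_to_volts(code: int, vref: float = 2.5) -> float:
    """Convert a 16-bit two's-complement ADC code to volts (±vref full scale)."""
    if not 0 <= code <= 0xFFFF:
        raise ValueError("code must fit in 16 bits")
    if code & 0x8000:  # sign bit set: negative value in two's complement
        code -= 0x10000
    return code * vref / 32768.0


def decode_buffer(raw: bytes, vref: float = 2.5) -> List[float]:
    """Decode a buffer of big-endian 16-bit samples (e.g. one SPI burst)."""
    if len(raw) % 2:
        raise ValueError("buffer must hold whole 16-bit samples")
    return [code_to_volts(int.from_bytes(raw[i:i + 2], "big"), vref)
            for i in range(0, len(raw), 2)]
```

With these assumptions one LSB is 2.5 V / 32768, about 76 µV, which is the resolution budget the analog front end would have to respect.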

- Time frame

Three months to a working hardware platform.

A draft schematic is ready. After a few weeks of refinement and peer review, PCB layout can begin next month. FPGA and software development for the GPMC interface may extend out to six months, depending on community involvement.

- Background & work by project submitter

I've been using Linux on PCs since 1994, and on ARM for about three years.

My first DAQ project was an 8-bit A/D and D/A plus dedicated timer chip, hand-wired on an ISA-bus prototyping card in high school. I enjoy dabbling with embedded microcontroller projects and building scientific instruments, like this aurora detector. I can hand-solder QFP packages. I also have a PhD in EE with an emphasis in signal processing.

- Wiki/URL Links

http://www.keteu.org/~haunma/proj/pandadaq/

- Contact information

haunma

CANalyzer - Embedded CANBUS Monitor and Display Project

- Description

CANBus is the backbone for a lot of communication in embedded hardware. I currently have three separate CANBuses within 20 feet of me that I am curious about:

An OBD-II/CANBus in my van. Another OBD-II/CANBus in my Freightliner/Fleetwood 39' motorhome. And another XANBus/CANBus-based bus that controls the solar charge controller/inverter/generator on my motorhome.

Ultimately I'd like to have a single system that lets me control/monitor all three buses at the same time.

Project goals:

1) Learn about CANBus and contribute what I learn and write back to the open source community.

2) Modularize the various parts so I can get something working quickly and still be able to go back and enhance things later.

3) To be able to sit in the bedroom of my motorhome and monitor all three buses (e.g. check battery voltage levels, manually start the generator, see if someone opens the door of my van, etc.).

- Time frame

Phase I - 3 weeks

Create an open source library to passively sniff a connected CANBus. Save the data to Wireshark-format files. Publish the library and notes about the three different buses. Use this as a basis for the next phase.
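One way to get Wireshark-readable output for Phase I is the classic libpcap file format with link type `LINKTYPE_CAN_SOCKETCAN` (227), which Wireshark decodes as CAN. A minimal Python sketch, assuming frames have already been captured (for example from a Linux SocketCAN interface) as `(timestamp, can_id, data, extended)` tuples; the capture side is not shown, and CAN FD is not handled.

```python
import io
import struct
from typing import BinaryIO, Iterable, Tuple

LINKTYPE_CAN_SOCKETCAN = 227
CAN_EFF_FLAG = 0x80000000  # set in the ID field for 29-bit (extended) frames

Frame = Tuple[float, int, bytes, bool]  # (timestamp, can_id, data, extended)


def write_can_pcap(out: BinaryIO, frames: Iterable[Frame]) -> int:
    """Write classic CAN frames to `out` as a libpcap file; return frame count."""
    # Global header: magic, version 2.4, UTC offset, sigfigs, snaplen, link type.
    out.write(struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535,
                          LINKTYPE_CAN_SOCKETCAN))
    count = 0
    for ts, can_id, data, extended in frames:
        if len(data) > 8:
            raise ValueError("classic CAN carries at most 8 data bytes")
        ident = (can_id | CAN_EFF_FLAG) if extended else can_id
        # SocketCAN pseudo-header: ID+flags in network byte order, data length,
        # 3 padding/reserved bytes, then the data padded to 8 bytes.
        payload = struct.pack(">IB3x", ident, len(data)) + data.ljust(8, b"\x00")
        sec = int(ts)
        usec = int(round((ts - sec) * 1_000_000))
        if usec == 1_000_000:  # rounding carried into the next second
            sec, usec = sec + 1, 0
        # Per-record header: seconds, microseconds, captured and original length.
        out.write(struct.pack("<IIII", sec, usec, len(payload), len(payload)))
        out.write(payload)
        count += 1
    return count


def can_pcap_bytes(frames: Iterable[Frame]) -> bytes:
    """Convenience wrapper that returns the pcap file contents in memory."""
    buf = io.BytesIO()
    write_can_pcap(buf, frames)
    return buf.getvalue()
```

Saving the bytes from `can_pcap_bytes(...)` to a file such as `van.pcap` should let Wireshark's CAN dissector display the sniffed traffic.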

Phase II - 4 weeks

Create an open source library to handle/process higher-level OBD-II messages.
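OBD-II mode 01 responses carry a PID byte followed by data bytes A, B, ... that are scaled by fixed formulas defined in SAE J1979. A small Python decoder for three common PIDs, assuming ISO-TP framing has already been stripped so the input starts at the `0x41` response byte; the PID table is deliberately tiny and a real library would cover many more.

```python
from typing import Callable, Dict, Sequence, Tuple

# PID -> (name, unit, formula over the data bytes A, B, ...)
PIDS: Dict[int, Tuple[str, str, Callable[[Sequence[int]], float]]] = {
    0x05: ("coolant_temp", "degC", lambda d: d[0] - 40),          # A - 40
    0x0C: ("engine_rpm", "rpm", lambda d: (256 * d[0] + d[1]) / 4),  # (256A + B) / 4
    0x0D: ("vehicle_speed", "km/h", lambda d: d[0]),              # A
}


def decode_mode01(response: Sequence[int]) -> Tuple[str, float, str]:
    """Decode a mode 01 response such as [0x41, 0x0C, 0x1A, 0xF8]."""
    if len(response) < 3 or response[0] != 0x41:
        raise ValueError("not a mode 01 (0x41) response")
    pid = response[1]
    if pid not in PIDS:
        raise KeyError(f"PID 0x{pid:02X} not supported in this sketch")
    name, unit, formula = PIDS[pid]
    return name, float(formula(response[2:])), unit
```

A table-driven design keeps adding PIDs to a one-line change, which fits the goal of building the library up incrementally.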

Phase III - 4 weeks

Create an open source library to handle/process XANBus messages. There appears to be some information about this bus out there already.

Phase IV - 3 weeks

Displaying information/preliminary UI: Take the information from the first three phases and make it viewable in a remote web browser. The OMAP platform will already be running Apache under Angstrom Linux. This phase will entail creating a daemon that collects the data from the buses using the libraries, formats it as HTML, and saves it in the Apache directories. This gets something going quickly, and I can also leverage open source utilities like the GNU plotting utilities to do some cool things.
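The Phase IV approach (a daemon periodically writing static HTML into Apache's directories) might be sketched like this in Python. The readings dictionary and page layout are hypothetical; writing to a temporary file and renaming it means Apache never serves a half-written page.

```python
import html
import os
import tempfile
from typing import Mapping


def render_status(readings: Mapping[str, str]) -> str:
    """Render bus readings as a minimal HTML page, escaping every value."""
    rows = "\n".join(
        f"<tr><td>{html.escape(name)}</td><td>{html.escape(value)}</td></tr>"
        for name, value in sorted(readings.items()))
    return ("<!DOCTYPE html>\n<html><head><title>Bus status</title></head>\n"
            f"<body><table>\n{rows}\n</table></body></html>\n")


def publish(page: str, path: str) -> None:
    """Atomically replace `path`, e.g. a file under the Apache document root."""
    directory = os.path.dirname(path) or "."
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as fh:
        fh.write(page)
    os.chmod(tmp, 0o644)  # mkstemp creates 0600 files; Apache must read it
    os.replace(tmp, path)  # atomic rename on POSIX within one filesystem
```

The daemon loop would call `publish(render_status(latest), ...)` every few seconds; escaping matters because decoded bus data is untrusted input.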

- Background & work by project submitter/s

I have 30+ years as a consultant/developer creating and maintaining hardware and protocol drivers under Unix/Linux.

Additional info:

I haven't decided on a CANBus adapter yet (many to choose from) but Panda/OMAP4 is the ideal platform for this project given its horsepower and the vast number of ports.

- Contact information

TripLogger

- Description

- TripLogger is a device which can be mounted on or in a car dash and provides details about the car's current performance. It would interface to the car's onboard diagnostic plug, and would record and concurrently display current engine metrics. In addition to real-time diagnostic display, the carputer can also record and export trip information such as location (via GPS), mileage, duration, acceleration/braking characteristics, and other possible performance profiles. Once this data is offloaded (wirelessly or via SD), it could be used by commuters to create optimum travel routes, or by just about anyone who would want to obtain more information about their driving habits.

- Optional enhancements could include camera and sound recording. These could be used for possible vlogging or for taking snapshots along the trip. The driver could either trigger these manually or the TripLogger could be told to take pictures when certain conditions are met (stopped at intersection, passing some landmark, etc).
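Mileage from GPS is typically computed by summing great-circle distances between successive fixes. A Python sketch using the haversine formula on a spherical Earth (mean radius 6371 km), an approximation that should be adequate for trip logging; the `(lat, lon)` fix format in degrees is an assumption.

```python
import math
from typing import Iterable, Optional, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    # min() guards against rounding pushing a slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def trip_distance_km(fixes: Iterable[Tuple[float, float]]) -> float:
    """Sum leg distances over a sequence of GPS fixes."""
    total = 0.0
    prev: Optional[Tuple[float, float]] = None
    for fix in fixes:
        if prev is not None:
            total += haversine_km(*prev, *fix)
        prev = fix
    return total
```

In practice, GPS jitter while stationary inflates the total, so fixes closer together than the receiver's accuracy would usually be skipped before summing.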

- Time frame

- Approximately 4 months to a demonstration prototype

- Background & work by project submitter/s

- Jennifer and Mark are a creative engineering team whose latest project work centers around home and family life. Jennifer is a creative artist with a degree in bio-chemistry and an enthusiasm for increasing her technology knowledge. Mark is a software architect by trade. With 20+ years as a professional software engineer, Mark has worked on or overseen software projects ranging from aircraft engine maintenance tracking to smart-home integration. Lately, Mark has partnered with his wife to work on embedded hardware platform solutions which integrate well in the home. Mark's previous work includes work on the OpenSolaris platform, various commercial projects, and co-authoring a book many moons ago.

- Wiki/URL Links

- Wiki page to follow soon

- Contact information

- storycrafter at gmail dot com

Mobile Planetarium and Classroom

- Description


The mobile planetarium is a portable device which has several key features:

- A handheld computer with touchscreen LCD for teacher or operator control

- A small (portable) projector (e.g. FAVI B1-LED-PICO Mini Pocket Projector, Business Edition)

- Control software which provides either a presentation interface (for general classroom use) or an astronomical guide for projecting star fields

- Armed with this device, instructors and teachers can create classroom lectures or act as star guides. In "offline" mode, the portable classroom acts largely as a general-purpose computer, allowing an instructor to lay out slideshows or other media for presentation. In "classroom" mode, the device allows the instructor to interact with the LCD display and control previously created content (or, in the case of the planetarium, input viewing parameters).

- Time frame

- Approximately 3 months to a demonstration prototype

- Background & work by project submitter/s

- Jennifer and Mark are a creative engineering team whose latest project work centers around home and family life. Jennifer is a creative artist with a degree in bio-chemistry and an enthusiasm for increasing her technology knowledge. Mark is a software architect by trade. With 20+ years as a professional software engineer, Mark has worked on or overseen software projects ranging from aircraft engine maintenance tracking to smart-home integration. Lately, Mark has partnered with his wife to work on embedded hardware platform solutions which integrate well in the home. Mark's previous work includes work on the OpenSolaris platform, various commercial projects, and co-authoring a book many moons ago.

- Wiki/URL Links

- Wiki page to follow soon

- Contact information

- storycrafter at gmail dot com

Week 4 -- Projects from 3/21/11 - 3/25/11

Docking Station for In-Air Gesture User Input and 3D Pseudo-Holographic Display

- Description

- The project aims to develop a docking station for mobile devices, in this case the Pandaboard, by adding an in-air gesture user interface and a pseudo-holographic 3D display to the embedded Linux/Android platform. In pseudo-holographic display mode, the image from the LCD/projector connected to the Pandaboard via HDMI will be projected onto a pseudo-holographic setup that uses the pyramid-shaped Pepper's ghost effect. The reason for this kind of display is that it is the simplest way to implement naked-eye 3D, and it can be enjoyed even by people who have trouble viewing stereoscopic 3D.

- Bare hand gestures will be used to interact with the pseudo-holographic 3D display as it is known to be natural and intuitive and the user is not required to be tethered to any hardware. The hand movements will be captured by a depth camera from a distance and the data will be processed with the help of various depth camera related open source projects (libfreenect, ofxKinect) to provide a gestural user input to the applications that will be developed in this project for various scenarios, as listed below:

- Media player (hand gestures for playing, pausing, volume control), essentially a gesture TV

- Realistic video calling/conferencing (hand gestures for calling, hanging-up, volume control)

- Photo browser (zooming, rotating, swiping to next)

- Gaming (motion control) similar to Xbox Kinect games

- Augmented reality application (panning, selecting), utilizing the RGB capture of the depth camera with information overlay

- 3D model/scene browser, which will incorporate head tracking to simulate viewing from all sides

- Both input and output processing require intensive computing power: the display has to be re-rendered and mirrored to three additional sides, and gesture recognition with a large gesture library is a well-known heavy image processing task. The Pandaboard, with its high-speed symmetric multiprocessing, powerful graphics core, and multimedia accelerator, makes this possible. This project will be a glimpse into the future of mobile computing and will hopefully bring usefulness and convenience to everyone in scenarios such as entertainment, virtual shopping, health care, information kiosks, advertising, education, etc.
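The "mirrored to three additional sides" step can be illustrated with a pure-Python sketch that lays out four copies of a square frame in a cross on a 3n × 3n canvas, each copy a 90° rotation of the previous one, so the layout is four-fold rotationally symmetric about the centre. This assumes a square frame and ignores any extra flip a particular pyramid may need; on the Pandaboard this would realistically be done on the GPU (for example as four textured quads) rather than per pixel.

```python
from typing import List, Optional

Grid = List[List[Optional[int]]]  # None marks unlit (black) pixels


def rotate_cw(g: Grid) -> Grid:
    """Rotate a square grid 90 degrees clockwise."""
    n = len(g)
    return [[g[n - 1 - c][r] for c in range(n)] for r in range(n)]


def pyramid_layout(frame: List[List[int]]) -> Grid:
    """Place four copies of an n x n frame around the centre of a 3n x 3n canvas.

    The bottom copy is the frame as given; the left, top and right copies
    come from rotating that canvas by 90, 180 and 270 degrees.
    """
    n = len(frame)
    size = 3 * n
    canvas: Grid = [[None] * size for _ in range(size)]
    for r in range(n):
        for c in range(n):
            canvas[2 * n + r][n + c] = frame[r][c]  # bottom-centre block
    result = [row[:] for row in canvas]
    rotated = canvas
    for _ in range(3):
        rotated = rotate_cw(rotated)
        # Copies occupy disjoint blocks, so overlaying lit pixels is enough.
        for r in range(size):
            for c in range(size):
                if rotated[r][c] is not None:
                    result[r][c] = rotated[r][c]
    return result
```

Each pyramid face then reflects one copy, and because the copies are rotations of each other, a viewer on any side sees the same view of the scene.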

- Time frame

- 4 months

- Background & work by project submitter/s

- Lecturer at the Multimedia University Faculty of Engineering for subjects such as Robotics & Automation, Embedded System Design and Engineering Mathematics.

- Android developer in the CodeAndroid Malaysia community, focusing on 3D, augmented reality and hardware such as robots. Gives presentations about Android at various national open source conferences and events.

- Has supervised various Final Year Projects related to 3D, natural user interfaces, augmented reality and Android since 2008.

- Involved in research projects and publications related to the Wiimote and multi-touch tabletops.

- Wiki/URL Links

- A related blog post.

- Contact information

Electronic interface support for dependents

- Description

- Hardware & Software

- A gesture/vocal interface to help people with disabilities (blindness, paraplegia, quadriplegia) or elderly people keep their independence:

- For different use cases:

- - To locate: a speaking GPS telling the location (street name) on request (button or speech recognition).

- - Read and tell (OCR and speech synthesis)

- - Actuators controlled by speech recognition

- - Gesture (eye movement or body movements) or speech commands to trigger actuators

- - Status of remote or local sensors in an equipped home

- - Communicate easily (VoIP and connectivity through Wi-Fi/GSM)

- Providing all these features in software:

- - Gesture integration and OCR character reading: image sensor and image processing

- - Voice recognition / speech synthesis: audio processing

- - Actuators and sensors (local: serial/Ethernet link; remote: Bluetooth/Wi-Fi)

- - Speech synthesis integrated for reading or locating

- - VoIP communication (start and stop calls with a predefined list of contacts, triggered by voice or gesture)

- I know all these functions already exist separately, but I want to integrate them in one system with all the advantages of the Pandaboard:

- - Small form factor

- - Low power consumption

- - Connectivity (video sensors, Wi-Fi, Bluetooth, ...)

- - Power for video and audio processing

- - Linux and OMAP Community

- - Get a fully integrated solution with one small board!!!

- - Pandas are peaceful and powerful bears!

- Time frame

- First hardware and software integration (within 6 months):

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - GPS (UART)

- - USB webcam

- - Bluetooth sensor/actuator modules

- Software

- - Gesture recognition (hand, body movement) triggering linked actions

- - Speech recognition triggering linked actions

- - Speech synthesis

- - Actuators and sensors (I already have some Bluetooth/serial sensors and actuators)

- - Get spoken street position (GPS plus speech synthesis) working

- Second hardware and software integration

- Hardware

- - Pandaboard

- - Headset/microphone

- - Speaker

- - Design of an extension board with GPS (external UART module) and image sensor

- - Battery pack and case

- Software

- - Eye movement recognition triggering linked actions

- - Develop a graphical interface for helpers to configure settings

- - Deeper integration with the person's environment (generally the home, for quadriplegic or elderly people)

- Background & work by project submitter/s:

- - Personally concerned by people with disabilities and elderly people

- - Already set up some assistive electronics (sensors, actuators)

- - Want to develop an open community of helpers around the Pandaboard

- - Work in electronic engineering (software AND hardware!)

- - Developed electronic hardware devices (digital cameras, Bluetooth sensors and actuators)

- - Hold a master's degree in signal (audio and image) processing: OpenCV is my best friend!

- - Developed Linux drivers for image sensors, PCI boards, LCD displays, ...

- - Have some gesture recognition working on my Intel-based Ubuntu computer

- Wiki/URL Links

- Gesture (wayv) : [17]

- OpenCVwiki : [18]

- Speech recognition (cmu-sphinx) : [19]

- Text to speech (festival) : [20]

- GPS (navit) : [21]

- Contact information

Alex mobile robotics platform

- Description

- The Alex platform is a small robot composed of the following parts:

- iRobot Create base

- 2 arms made of Bioloid parts

- 2 eyes on pan-tilt mounts made of Nexus One phones and Bioloid parts

- A PandaBoard for central control

- Alex is part of a larger project to advance artificial intelligence through embodied AI. Check out the project website for details.

- Why Alex is Awesome

- Willow Garage had the right idea with the PR2, but the wrong price point. Alex will be the PR2 for the rest of us. We're targeting a price point just north of $1k, as opposed to $400k+ for the PR2.

- There are thousands of robotics research labs reinventing robots that can move, see, hear, pick up things, make noise, and generally interact with their environment in useful ways. With Alex we plan to stop that cycle of reinvention, and start a cycle of innovation in software.

- Time frame

- Prototype in 3 months, first beta robots out the door by Christmas

- Why Alex will get done

- Over the last three months, I quit my job, completed a robotics internship at Anybots, and landed a new job at an artificial intelligence powerhouse. I'm committed to spending significant personal resources on bringing Alex to production quality and beyond. I've already committed thousands of dollars to supporting similar projects like the bilibot (bilibot.com). Eventually the Alex project will be a large consumer of PandaBoards; unfortunately, you just can't buy one right now!

- Alex and Panda

- The mobile robotics space is constrained by space, power, weight, and CPU. We can make use of the significant power available in mobile phones (see the cellbots project), but to provide a powerful central processing system that has room to grow, you need a board with the feature set and efficiency of the PandaBoard. If we want to achieve untethered operation, there's no better solution than Panda.

- Project Website

- embodiedai.pbworks.com

- Contact information

- iandanforth gmail com

PandaPLC

- Description

- The PandaPLC will consist of a PandaBoard and the surrounding hardware and software to produce an Open Hardware PLC. PLCs are the hardened industrial controllers used to run machines or automate processes in an industrial setting. They provide computing functions in an easy-to-use package, usually with a graphical programming language that models logic with relay analogies. They generally provide rugged "real world" inputs and outputs for sensors and actuators of various types. They are widely used in industry and strongly proprietary. They are also quite primitive compared to PCs. This project aims to use the PandaBoard's far greater capabilities to provide functions that PLCs lack or offer only through expensive add-ons, and to make both the hardware and software Open.

- The PandaBoard will connect to a backplane using SPI. The backplane will have 8 slots for function cards. Each card will provide either inputs or outputs using SPI peripheral chips plus any necessary level shifting, scaling and protection. The backplane and cards will mount in a rack, forming a PLC unit that can be installed in a machine cabinet or other location. The backplane will supply power to the PandaBoard and the I/O cards. Since the hardware alone is quite an endeavor in my circumstances, I will partner with an existing OSS project that will provide software to run on top of the existing Linux ports.
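- PLCs typically run a repeating scan cycle: read all inputs into an image, evaluate the relay-style logic, then write all outputs. A minimal Python sketch of that cycle follows, assuming a hypothetical in-memory `Backplane` class standing in for the 8 SPI I/O slots (real card drivers would replace its lists) and a classic start/stop "seal-in" rung. The slot and bit assignments are illustrative only.

```python
from dataclasses import dataclass, field

NUM_SLOTS = 8  # the proposed backplane has 8 function-card slots


@dataclass
class Backplane:
    """Hypothetical in-memory stand-in for the SPI backplane.

    Each slot holds one 8-bit value. A real driver would read and write the
    SPI peripheral chips on each card instead of these lists.
    """
    inputs: list = field(default_factory=lambda: [0] * NUM_SLOTS)
    outputs: list = field(default_factory=lambda: [0] * NUM_SLOTS)

    def read_bit(self, slot: int, bit: int) -> bool:
        return bool((self.inputs[slot] >> bit) & 1)

    def write_bit(self, slot: int, bit: int, value: bool) -> None:
        if value:
            self.outputs[slot] |= 1 << bit
        else:
            self.outputs[slot] &= ~(1 << bit)


def scan(bp: Backplane, state: dict) -> None:
    """One PLC scan: snapshot inputs, evaluate the rung, then write outputs."""
    # 1. Input image: read every input once so the logic sees consistent values.
    start = bp.read_bit(0, 0)    # START push button (normally open)
    stop_ok = bp.read_bit(0, 1)  # STOP button wired normally closed: True = not pressed

    # 2. Logic, expressed as a relay "seal-in" rung:
    #    --[START]--+--[STOP_OK]--(MOTOR)
    #    --[MOTOR]--+
    state["motor"] = (start or state["motor"]) and stop_ok

    # 3. Output image: drive the (hypothetical) motor contactor on slot 1, bit 0.
    bp.write_bit(1, 0, state["motor"])


if __name__ == "__main__":
    bp, state = Backplane(), {"motor": False}
    for inputs in (0b11, 0b10, 0b00):  # press START, release it, press STOP
        bp.inputs[0] = inputs
        scan(bp, state)
        print(f"inputs={inputs:02b} motor={state['motor']}")
```

- Reading all inputs before evaluating any logic, and writing outputs only at the end, keeps an input change in the middle of a scan from producing inconsistent results; the motor stays on after START is released because its own output "seals in" the rung.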

- Time frame

- The backplane and plug-in cards exist as designs with artwork and will be fabricated once I have a processor board on hand to confirm dimensions and final details.

- Development is on a shoestring so timing will depend on some events out of my control, but I anticipate being able to prototype this summer if not sooner. The software exists but will require modest porting for the embedded environment.

- Project goals

- To advance the state of the art in PLCs with superior networking, video capability, advanced math, machine vision, HMI, etc. as standard, and to provide an Open alternative to expensive proprietary PLCs and really expensive, Windows-only programming tools; and to do so with low-power, high-volume, powerful ARM processors that leverage the tremendous advances made for mobile devices.

- Background & work by project submitter/s

- I have worked with Linux automation for many years and designed and built Linux-based automated test equipment. I have also worked with traditional PLCs and automation gear, taught electronics, and have been known to program in C and a few other languages. I founded an OSS automation tools project variously known as MAT/plc and Beremiz.

- Wiki/URL Links

- Not Yet

- Contact information

Panda-media

- Description

- The PandaMedia pursues the dream of a completely FOSS set-top computer for gaming and multimedia.

- I believe a fanless single-board computer such as the PandaBoard is the best fit for this platform, and that the PandaBoard's power is needed to push out the awesome at 1080p.

- Unlike other set-top boxes it is preferable if the PandaMedia retains the ability to be used as a computer with installable applications.

- Another point is that, because it is Open Source, it ought to have remote-control applications and capabilities equal to, and beyond, those of today's set-top boxes.

- Yet another point is that there should be no need to unlock it; in this respect it should be the anti-TiVo or anti-Apple TV.

- Time frame

- Should be finished by the end of summer break, barring unforeseen obstacles.

- Background & work by project submitter/s

- My skills lie in dedication and devotion beyond sensibility. Years of Linux usage (Ubuntu and Gentoo). Years of programming (C/C++). Helped detect bugs in a system with several thousand concurrent users.

- Ad-hoc guru for the school's IT department. Currently a student (I don't know how the educational level translates to US/UK systems) with advanced math and physics. Also worth mentioning: I have read a lot on operating systems, computer history, and computer science, and I am the creator of SMIPL.

- Wiki/URL Links

- Not yet

- Contact information

TripLogger

- Description

- TripLogger is a device which can be mounted on or in a car dash and provides details about the car's current performance. It would interface to the car's on-board diagnostics (OBD) plug, and would record and concurrently display current engine metrics. In addition to real-time diagnostic display, the carputer can also record and export trip information such as location (via GPS), mileage, duration, acceleration/braking characteristics, and other possible performance profiles. Once this data is offloaded (wirelessly or via SD), it could be used by commuters to create optimum travel routes, or by just about anyone who wants more information about their driving habits.

- Optional enhancements could include camera and sound recording. These could be used for vlogging or for taking snapshots along the trip. The driver could either trigger these manually or the TripLogger could be told to take pictures when certain conditions are met (stopped at an intersection, passing some landmark, etc.).
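- OBD-II exposes live engine data as Mode 01 parameter IDs (PIDs), each with a standard scaling formula. A minimal Python sketch of decoding a few of them follows, assuming replies arrive as space-separated hex strings such as `41 0C 1A F8` (the proposal does not specify the adapter or transport). The function names are hypothetical, and the acceleration helper only illustrates how braking/acceleration characteristics could be derived from logged speed samples.

```python
def decode_obd_reply(reply: str) -> tuple:
    """Decode a Mode 01 OBD-II reply such as '41 0C 1A F8' into (name, value).

    Only three standard PIDs are handled in this sketch.
    """
    data = [int(byte, 16) for byte in reply.split()]
    if len(data) < 3 or data[0] != 0x41:  # 0x41 = positive reply to Mode 01
        raise ValueError(f"not a Mode 01 reply: {reply!r}")
    pid, a = data[1], data[2]
    if pid == 0x0C:  # engine RPM = (256*A + B) / 4
        if len(data) < 4:
            raise ValueError(f"RPM reply needs two data bytes: {reply!r}")
        return "rpm", (256 * a + data[3]) / 4
    if pid == 0x0D:  # vehicle speed = A km/h
        return "speed_kmh", float(a)
    if pid == 0x05:  # engine coolant temperature = A - 40 degrees C
        return "coolant_c", float(a - 40)
    raise ValueError(f"unsupported PID 0x{pid:02X}")


def acceleration_ms2(prev_kmh: float, now_kmh: float, dt_s: float) -> float:
    """Average acceleration between two speed samples (negative = braking)."""
    return (now_kmh - prev_kmh) / 3.6 / dt_s  # km/h -> m/s, then divide by time


if __name__ == "__main__":
    for reply in ("41 0C 1A F8", "41 0D 3C", "41 05 7B"):
        print(reply, "->", decode_obd_reply(reply))
```

- For example, `decode_obd_reply("41 0C 1A F8")` returns `("rpm", 1726.0)`, since 0x1AF8 = 6904 and 6904 / 4 = 1726.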

- Time frame

- Approximately 4 months to a demonstration prototype

- Background & work by project submitter/s

- Jennifer and Mark are a creative engineering team whose latest project work centers on home and family life. Jennifer is a creative artist with a degree in biochemistry and an enthusiasm for expanding her technology knowledge. Mark is a software architect by trade. With 20+ years as a professional software engineer, Mark has worked on or overseen software projects ranging from aircraft engine maintenance tracking to smart-home integration. Lately, Mark has partnered with his wife on embedded hardware platform solutions that integrate well into the home. Mark has previously worked on the OpenSolaris platform and various commercial projects, and co-authored a book many moons ago.

- Wiki/URL Links

- Wiki page to follow soon

- Contact information

- storycrafter at gmail dot com

Mobile Planetarium and Classroom

- Description

- The mobile planetarium is a portable device with several key features:

- A handheld computer with touchscreen LCD for teacher or operator control

- A small (portable) projector (e.g. FAVI B1-LED-PICO Mini Pocket Projector, Business Edition)

- Control software which provides either a presentation interface (for general classroom use) or an astronomical guide for projecting star fields

- Armed with this device, instructors and teachers can create classroom lectures or act as star guides. In "offline" mode, the portable classroom acts largely as a general-purpose computer, allowing an instructor to lay out slideshows or other media for presentation. In "classroom" mode, the device allows the instructor to interact with the LCD display and control previously created content (or, in the case of the planetarium, input viewing parameters).

- Time frame

- Approximately 3 months to a demonstration prototype

- Background & work by project submitter/s

- Jennifer and Mark are a creative engineering team whose latest project work centers on home and family life. Jennifer is a creative artist with a degree in biochemistry and an enthusiasm for expanding her technology knowledge. Mark is a software architect by trade. With 20+ years as a professional software engineer, Mark has worked on or overseen software projects ranging from aircraft engine maintenance tracking to smart-home integration. Lately, Mark has partnered with his wife on embedded hardware platform solutions that integrate well into the home. Mark has previously worked on the OpenSolaris platform and various commercial projects, and co-authored a book many moons ago.

- Wiki/URL Links

- Wiki page to follow soon

- Contact information

- storycrafter at gmail dot com

PandAmahi: Amahi on Panda

- Description

The aim is to port the Amahi Linux home server to the PandaBoard. Amahi is an easy-to-install, configurable home server.

A good overview of Amahi can be found at http://www.amahi.org/features

The current version of Amahi only runs on Fedora. Since the Fedora ARM version is missing quite a few packages, the goal is to get Amahi running on the PandaBoard under Debian and/or Ubuntu. This involves a fair amount of porting effort due to the differences in package management (rpm vs. deb) and in configuration file locations and structure (e.g. httpd.conf and /etc/sysconfig on Fedora, versus apache2.conf and various locations for configuration files on Debian).
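Part of that porting effort is mechanical translation of Fedora-specific commands and paths. A minimal Python sketch of such a lookup follows, covering only a few well-known equivalents. The tables and the `to_debian` helper are hypothetical illustrations, not part of Amahi's code, and `/etc/default` is only a rough Debian counterpart of `/etc/sysconfig`.

```python
# Illustrative Fedora -> Debian/Ubuntu equivalents; far from exhaustive.
PATH_MAP = {
    "/etc/httpd/conf/httpd.conf": "/etc/apache2/apache2.conf",
    "/etc/sysconfig/": "/etc/default/",  # rough analogue only
}

COMMAND_MAP = {
    "yum install -y": "apt-get install -y",
    "rpm -q": "dpkg -s",                 # both report whether a package is installed
    "service httpd": "service apache2",  # the Apache service name differs
}


def to_debian(text: str) -> str:
    """Rewrite known Fedora paths and command prefixes to Debian equivalents."""
    for fedora, debian in {**PATH_MAP, **COMMAND_MAP}.items():
        text = text.replace(fedora, debian)
    return text


if __name__ == "__main__":
    print(to_debian("yum install -y samba && service httpd restart"))
```

Package names can differ as well (Fedora's `httpd` package is `apache2` on Debian), so a real port would need a separate package-name table rather than plain substring replacement.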

The end result should be a working Amahi system supporting most (if not all) Amahi packages (sabnzbd, ushare, bittorrent, mediawiki, greyhole, Ampache, ...).

A complete list can be found at http://www.amahi.org/apps?s=all

A feasibility study for the project has already been started; so far it has involved a lot of work, but no blocking issues have been identified.

All of the code will be released under GPL (the current code of Amahi is also under GPL).

- Time frame

Approximately three months.

- Background & work by project submitter/s

I have about 25 years of experience in Unix/Linux programming (starting with Unix V7 on an MC68020). In the past I created my own home server for the BeagleBoard; see http://elinux.org/BeagleBoard/James for details. For my previous employer I was also involved in building a prototype home server and in implementing a media player.

The rationale for moving away from James towards Amahi is that Amahi is much more advanced in ease of installation and user interface.

- Wiki/URL Links

Amahi has a website at http://www.amahi.org and a wiki at http://wiki.amahi.org. On this wiki a section for this project will be created.

- Contact information

fransmeulenbroeks at gmail dot com

Arch Linux for Panda

Port Arch Linux to the PandaBoard, continuing the work already done at PlugApps, where we ported it to the SheevaPlug (armv5te) platform. We also aim to get X working for a neat desktop computer experience.

Time frame:

Within 2 months of getting a PandaBoard. We have the package build system all ready for armv5te, so making a new repository for the PandaBoard wouldn't be a problem. We also have several multi-core cross-compiling machines.

Background & work by project submitter/s:

PlugApps is the wiki we run, with how-to guides for installing our port of Arch Linux on many devices. The forum and package repository are also great places to look. We have 3 full-time developers in IRC (#plugapps on Freenode) working on this.

Wiki/URL Links:

Contact information:

Low cost open hardware LCD solution for the pandaboard

- Description

The aim of the project is to create an out-of-the-box, low-cost LCD solution (under US$100) for the PandaBoard. The steps include the design and layout of an LCD adapter board, including final Gerber files ready for PCB manufacture; a BOM of the components used; and modifying the Angstrom Linux kernel drivers to support the LCD and touch screen, provided as an image and patches. Using the deliverables of this project, it should be possible to build a low-cost LCD solution for the PandaBoard and to encourage further enhancement of the product by the community. The Newhaven Display 480x272 panel NHD-4.3-480272MF-ATXI-T-1 will be used.
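When sizing the display buffer for the driver work, the frame size follows directly from the panel's 480x272 resolution. A small Python sketch of that arithmetic follows, assuming the common 16-bit (RGB565) and 32-bit (XRGB8888) framebuffer formats; the proposal does not say which pixel format the driver will use, and `framebuffer_bytes` is a hypothetical helper name.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one full frame at the given resolution and depth."""
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    for bpp in (16, 32):  # RGB565 and XRGB8888
        size = framebuffer_bytes(480, 272, bpp)
        print(f"{bpp} bpp: {size} bytes ({size / 1024:.1f} KiB)")
```

This prints 261120 bytes (255.0 KiB) per frame at 16 bpp and 522240 bytes (510.0 KiB) at 32 bpp, so even double buffering at 32 bpp stays around 1 MiB.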

- Time frame

Design, layout and PCB respin: 4 weeks; Angstrom Linux kernel drivers: 4 weeks

- Background & work by project submitter/s

I am a software developer working on embedded systems and have done a similar project for the Beagleboard (see images).

- Wiki/URL Links

Will be provided if project approved.

- Contact information

Football playing robot

- Description

- The project is to build an autonomous robot that plays football with golf balls on a 2 m x 3 m arena under the rules of Robotex. Robotex is an annual open Estonian robotics competition, held mainly between the three largest informatics-related universities: University of Tartu, Tallinn University of Technology and Estonian IT College. The goal of the competition is to raise the popularity of the exact sciences and computer science. Our team is one of the participating teams from the University of Tartu.

- This year we are trying to build a smaller and faster robot than any seen at this competition so far. Features of our new robot include:

- omnidirectional movement using brushless motors and self-designed omniwheels